v0.2.0

- _CORE

-

CoreTypes

▸

- Static members

- .addCoreType

- .getCoreType

-

Decoder

▸

- Static members

- .isBinary

- Filter ▸

-

Image2D

▸

- Static members

- .TYPE

- Instance members

- #clone

- #computeSimpleStat

- #float32Clone

- #get1dIndexFrom2dPosition

- #get2dPositionFrom1dIndex

- #getAvg

- #getComponentsPerPixel

- #getData

- #getDataAsFloat32Array

- #getDataAsUInt8Array

- #getDataCopy

- #getHeight

- #getMax

- #getMin

- #getNcpp

- #getPixel

- #getSegmentSample

- #getWidth

- #hollowClone

- #isInside

- #setData

- #setPixel

-

Image3D

▸

- Static members

- .TYPE

- Instance members

- #addTransformation

- #clone

- #getData

- #getDataCopy

- #getDataUint8

- #getDimensionIndexFromName

- #getDimensionSize

- #getMaxValue

- #getMinValue

- #getNumberOfSlices

- #getPositionFromTransfoSpaceToVoxelSpace

- #getPositionFromVoxelSpaceToTransfoSpace

- #getPositionWorldToVoxel

- #getSegmentSampleTransfoSpace

- #getSegmentSampleVoxelSpace

- #getSlice

- #getSliceSize

- #getTimeLength

- #getTransfoBox

- #getTransformMatrix

- #getTransfoVolumeCorners

- #getVoxel

- #getVoxelBox

- #getVoxelCoordinatesSwapMatrix

- #getVoxelSafe

- #getVoxelTransfoSpace

- #getW2VMatrixSwapped

- #hasTransform

- #isInsideTransfoSpace

- #isInsideVoxelSpace

- #resetData

- #setData

- #setVoxel

- #setVoxelTransfoSpace

-

ImageToImageFilter

▸

- Instance members

- #hasSameNcppInput

- #hasSameSizeInput

-

LineString

▸

- Static members

- .TYPE

-

Mesh3D

▸

- Static members

- .TYPE

- Instance members

- #buildBox

- #buildBvhTree

- #buildTriangleList

- #buildTriangleList

- #getBox

- #getBoxCenter

- #getNumberOfComponentsPerColor

- #getNumberOfVertices

- #getNumberOfVerticesPerShapes

- #getPolygonFacesNormals

- #getPolygonFacesNormalsCopy

- #getPolygonFacesOrder

- #getPolygonFacesOrderCopy

- #getVertexColors

- #getVertexColorsCopy

- #getVertexPositionCopy

- #getVertexPositions

- #intersectBox

- #intersectRay

- #intersectSphere

- #isInside

- #setNumberOfComponentsPerColor

- #setNumberOfVerticesPerShapes

- #setPolygonFacesNormals

- #setPolygonFacesOrder

- #setVertexColors

- #setVertexPositions

- #updateColorTriangle

-

PixpipeContainer

▸

- Instance members

- #setRawData

-

PixpipeContainerMultiData

▸

- Instance members

- #checkIntegrity

- #clone

- #doesDataExist

- #getData

- #getDataCopy

- #getDataIndex

- #setData

-

PixpipeObject

▸

- Static members

- .TYPE

-

Signal1D

▸

- Instance members

- #clone

- #dataToString

- #getData

- #hollowClone

- #setData

- #toString

- _DECODER

- AllFormatsGenericDecoder

- EdfDecoder

- GenericDecoderInterface

- Image2DGenericDecoder

- Image3DGenericDecoder

- JpegDecoder

- Mesh3DGenericDecoder

- MghDecoder

-

Minc2Decoder

▸

- Instance members

- #isRgbVolume

- #rgbVoxels

- #scaleVoxels

-

MniObjDecoder

▸

- Static members

- .isBinary

- NiftiDecoder

- PixBinDecoder

-

PixBinEncoder

▸

- Static members

- .MAGIC_NUMBER

- PixpDecoder

-

PixpEncoder

▸

- Instance members

- #download

-

PngDecoder

▸

- Instance members

- #_isPng

- Signal1DGenericDecoder

- TiffDecoder

- _FILTER

- ApplyColormapFilter

- BandPassSignal1D

- ContourHolesImage2DFilter

- ContourImage2DFilter

- CropImageFilter

- DifferenceEquationSignal1D

- FloodFillImageFilter

-

ForEachPixelImageFilter

▸

- Instance members

- #_run

- ForEachPixelReadOnlyFilter

- GradientImageFilter

- HighPassSignal1D

- IDWSparseInterpolationImageFilter

- Image3DToMosaicFilter

- ImageBlendExpressionFilter

- ImageDerivativeFilter

- LowPassFreqSignal1D

- LowPassSignal1D

- Mesh3DToVolumetricHullFilter

- MultiplyImageFilter

- NaturalNeighborSparseInterpolationImageFilter

- NearestNeighborSparseInterpolationImageFilter

- NormalizeImageFilter

- PatchImageFilter

- SimpleThresholdFilter

- SimplifyLineStringFilter

- SpatialConvolutionFilter

-

SpectralScaleImageFilter

▸

- Instance members

- #_run

- TerrainRgbToElevationImageFilter

- TriangulationSparseInterpolationImageFilter

- _HELPER

-

AngleToHueWheelHelper

▸

- Instance members

- #_hsl2Rgba

- ColorScales

-

Colormap

▸

- Static members

- .getAvailableStyles

- .TYPE

-

LineStringPrinterOnImage2DHelper

▸

- Instance members

- #addLineString

- _IO

- BrowserDownloadBuffer

-

CanvasImageWriter

▸

- Instance members

- #_run

- #getCanvas

-

FileImageReader

▸

- Instance members

- #_run

- #hasValidInput

- FileToArrayBufferReader

-

UrlImageReader

▸

- Instance members

- #_run

- UrlToArrayBufferReader

- _UTILS

-

FunctionGenerator

▸

- Static members

- .gaussianFrequencyResponse

- .gaussianFrequencyResponseSingle

- MatrixTricks ▸

- _misc

- convertImage3DMetadata

- swapn

- transformToMinc

- ForwardFourierImageFilter

- ignore_offsets

- InverseFourierImageFilter

_CORE

The core of Pixpipe is a set of base classes that most Pixpipe objects inherit from. Some are meant to be used extensively by developers (Signal1D, Image2D, Image3D) and some are fundamental parts that work behind the scenes (Filter, PixpipeContainer, PixpipeObject).

Make sure you understand who is who before you start.

CoreTypes

CoreTypes is a bit of an exception in Pixpipejs because it does not inherit from PixpipeObject and it contains only static methods. In a sense, it is comparable to a singleton that stores the constructors of all the Pixpipe core types so that they can be retrieved just by querying their name.

When a new type is created, the static method .addCoreType() should be called right after the closing curly bracket of the class declaration. This is required only if we want to reference this class as a core type.

[STATIC] Adds a new type to the collection of core types. This is used when we want to retrieve a type and instantiate an object of this type using its constructor name.

(Class)

the class of the type
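The registration pattern described above can be sketched as follows. This is a minimal stand-in and not the Pixpipe source: the names TypeRegistry, addType, getType and MyImage are hypothetical, and keeping the constructors in a Map keyed by class name is an assumption.

```javascript
// Hypothetical static-only registry (assumption, not the Pixpipe code).
class TypeRegistry {
  // Register a constructor under its class name.
  static addType(typeClass) {
    TypeRegistry._types.set(typeClass.name, typeClass);
  }

  // Return the constructor registered under `name`, or null if unknown.
  static getType(name) {
    return TypeRegistry._types.has(name) ? TypeRegistry._types.get(name) : null;
  }
}
TypeRegistry._types = new Map();

class MyImage {
  constructor() {
    this.kind = "MyImage";
  }
}
// Registration happens right after the closing bracket of the class.
TypeRegistry.addType(MyImage);

// Later on, an instance can be created knowing only the name.
const RetrievedConstructor = TypeRegistry.getType("MyImage");
const registryInstance = new RetrievedConstructor();
```

Because the registry only holds static state, it never needs to be instantiated, which is what makes it comparable to a singleton.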

Decoder

The class Decoder is an interface and is not supposed to be used as-is.

The file decoders (in the decoder folder) must inherit from this class because it implements some handy logic, for example the static function isBinary, which tells whether the associated format is binary or text based. In addition, it has a method to convert a UTF-8 binary buffer into a UTF-8 string. This is useful when the associated file type is text based but the given buffer (of a valid file) is encoded in a binary way.

The metadata targetType must be overwritten with a string matching one of the Pixpipe types (e.g. "Image3DAlt").

Extends Filter

Filter

Filter is a base class and must be inherited to be used properly. A filter takes one or more Image instances as input and returns one or more Image instances as output. Every filter has an addInput(), a getOutput() and an update() method. Every input and output can be arranged by category, so that internally a filter can use and output different kinds of data.

Usage

Extends PixpipeObject

Hardcode the datatype

Remove all the inputs given so far.

Validate the input data using a model defined in _inputValidator. Every class that inherits from Filter must implement its own _inputValidator. Using it is not mandatory, but it is good practice.

Defines a callback. By default, no callback is called.

(any)

(any)

Same as PixpipeObject.setMetadata but also sets _isOutputReady to false.

(any)

(any)

MUST be implemented by the class that inherits from this one. Launches the process.
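The addInput() / update() / getOutput() cycle can be illustrated with a stripped-down sketch. The classes SketchFilter and DoubleFilter are hypothetical, and the idea that update() simply delegates to a _run() method supplied by the subclass is an assumption made for the example, not a description of the actual Filter code.

```javascript
// Hypothetical miniature of the filter pattern (assumption, not Pixpipe code).
class SketchFilter {
  constructor() {
    this._input = {};  // inputs, arranged by category
    this._output = {}; // outputs, arranged by category
  }

  addInput(data, category = 0) {
    this._input[category] = data;
  }

  getOutput(category = 0) {
    const exists = Object.prototype.hasOwnProperty.call(this._output, category);
    return exists ? this._output[category] : null;
  }

  // Launch the process; the actual work lives in the subclass.
  update() {
    this._run();
  }

  _run() {
    throw new Error("_run() must be implemented by the subclass");
  }
}

// A concrete filter that doubles every value of its input.
class DoubleFilter extends SketchFilter {
  _run() {
    this._output[0] = this._input[0].map((value) => value * 2);
  }
}

const doubler = new DoubleFilter();
doubler.addInput(new Float32Array([1, 2, 3]));
doubler.update();
const doubled = doubler.getOutput();
```

Calling update() on the base class throws, which mirrors the rule that the processing method must be provided by the inheriting class.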

Image2D

The Image2D class is one of the few base elements of Pixpipejs. It is always considered to be 4 channels (RGBA) and stored as a Float32Array typed array.

Usage

Extends PixpipeContainer

(Object

= null)

if present:

- options.width {Number} width in pixel

- options.height {Number} height in pixel

- options.color {Array} can be [r, g, b, a] or just [i]. Optional.

Hardcode the datatype

Compute "min" "max" and "avg" and store them in metadata

Get the internal image data (pointer)

TypedArray:

the original data (most likely a Float32Array). Do not modify it; in case of doubt, use getDataCopy()

Get a copy of the data but forced as Float 32 (no scaling is done)

Float32Array:

the casted array

No matter the original type of the internal data, scale it into a [0, 255] Uint8Array

Uint8Array:

scaled data

Get a copy of the data

TypedArray:

a deep copy of the data (most likely a Float32Array)

Sample the color along a segment

(Object)

ending position of type {x: Number, y: Number}

(any)

Object:

an object holding arrays, shaped like this:

{
  positions: [ {x: x0, y: y0}, {x: x1, y: y1}, {x: x2, y: y2}, ... ],
  labels: [ "(x0, y0)", "(x1, y1)", "(x2, y2)", ... ],
  colors: [
    [ r0, r1, r2 ... ],
    [ g0, g1, g2 ... ],
    [ b0, b1, b2 ... ]
  ]
}

return null if posFrom or posTo is outside
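To make the returned structure concrete, here is a small sketch of segment sampling. The function sampleSegment is hypothetical; taking one nearest-pixel sample per pixel of segment length and assuming a row-major interleaved buffer are choices made for the example, since the source does not state how the samples are spaced.

```javascript
// Sketch only: nearest-pixel sampling along a segment (assumed strategy).
function sampleSegment(data, width, height, ncpp, posFrom, posTo) {
  const isInside = (p) => p.x >= 0 && p.x < width && p.y >= 0 && p.y < height;
  if (!isInside(posFrom) || !isInside(posTo)) {
    return null; // same contract as described above
  }

  const dx = posTo.x - posFrom.x;
  const dy = posTo.y - posFrom.y;
  // Roughly one sample per pixel of segment length, at least one step.
  const steps = Math.max(1, Math.ceil(Math.hypot(dx, dy)));

  const result = { positions: [], labels: [], colors: [] };
  for (let c = 0; c < ncpp; c++) {
    result.colors.push([]); // one array per component
  }

  for (let s = 0; s <= steps; s++) {
    const x = Math.round(posFrom.x + (dx * s) / steps);
    const y = Math.round(posFrom.y + (dy * s) / steps);
    result.positions.push({ x, y });
    result.labels.push(`(${x}, ${y})`);
    const offset = (y * width + x) * ncpp;
    for (let c = 0; c < ncpp; c++) {
      result.colors[c].push(data[offset + c]);
    }
  }
  return result;
}

// A 3x1 single-component image sampled from left to right.
const segmentSample = sampleSegment(
  new Float32Array([10, 20, 30]), 3, 1, 1, { x: 0, y: 0 }, { x: 2, y: 0 }
);
```

The colors entry holds one array per component, so an RGB image yields the three arrays shown in the structure above.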

Set the data to this Image2D.

(Float32Array)

1D array of raw data stored as RGBARGBA...

(Number)

width of the Image2D

(Number)

height of the Image2D

(Number

= 4)

number of components per pixel (default: 4)

(Boolean

= false)

if true, a copy of the data is made; if false, only the pointer is kept
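With data stored as RGBARGBA..., locating a pixel in the 1D array is a matter of arithmetic. The helper names below (pixelOffset, readPixel) are hypothetical, and the row-major ordering (rows stored one after the other) is an assumption.

```javascript
// Offset of the first component of pixel (x, y) in an interleaved buffer.
// Assumes rows are stored one after the other (row-major).
function pixelOffset(x, y, width, ncpp) {
  return (y * width + x) * ncpp;
}

// Read all the components of one pixel as a plain array.
function readPixel(data, width, ncpp, x, y) {
  const start = pixelOffset(x, y, width, ncpp);
  return Array.from(data.subarray(start, start + ncpp));
}

// A 2x2 RGBA image whose values are simply 0..15.
const rgbaData = Float32Array.from({ length: 16 }, (_, n) => n);
const bottomRightPixel = readPixel(rgbaData, 2, 4, 1, 1);
```

With ncpp left at its default of 4, each pixel occupies four consecutive values of the array.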

Image3D

The Image3D class is one of the few base elements of Pixpipejs. It is always considered to be 4 channels (RGBA) and stored as a Float32Array typed array.

Extends PixpipeContainer

(Object

= null)

may contain the following:

- options.xSize {Number} space length along x axis

- options.ySize {Number} space length along y axis

- options.zSize {Number} space length along z axis

- options.tSize {Number} space length along t axis (time)

- options.ncpp {Number} number of components per pixel. Default = 1

If at least xSize, ySize and zSize are specified, a buffer is automatically initialized with the value 0.

Hardcode the datatype

Float32Array:

the original data. Do not modify it; in case of doubt, use getDataCopy()

Float32Array:

a deep copy of the data

Get the data scaled as uint8, taking into consideration the actual min-max range of the data (and not the possible min-max range allowed by the data type). Notice: values are rounded

Uint8Array:

the scaled data
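A minimal sketch of this kind of min-max scaling is shown below. The function name scaleToUint8 is hypothetical, and returning zeros for constant data is an arbitrary choice made for the example.

```javascript
// Scale any numeric typed array to [0, 255] using its actual min and max.
function scaleToUint8(data) {
  let min = Infinity;
  let max = -Infinity;
  for (const value of data) {
    if (value < min) min = value;
    if (value > max) max = value;
  }

  const scaled = new Uint8Array(data.length);
  const range = max - min;
  if (range === 0) {
    return scaled; // constant (or empty) data: all zeros, arbitrary choice
  }
  for (let n = 0; n < data.length; n++) {
    scaled[n] = Math.round(((data[n] - min) / range) * 255);
  }
  return scaled;
}

const scaledSample = scaleToUint8(new Float32Array([-1, 0, 1]));
```

Because the range comes from the data itself, a volume holding values between -1 and 1 still uses the full 0 to 255 span.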

Convert coordinates from a given (non-voxel based) position into voxel based coordinates

(Object)

a non-voxel based coordinate as {x: Number, y: Number, z: Number}

(String)

name of the transformation to use, "*2v"

(Boolean

= true)

round to the closest voxel coord integer (default: true)

Object:

coordinates {i: Number, j: Number, k: Number} in the coordinate space given in argument

Convert a position from voxel coordinates to another space

(Object)

voxel coordinates like {i: Number, j: Number, k: Number} where i is the slowest varying and k is the fastest varying

(String)

name of a transformation registered in the metadata as a child property of "transformations", "v2*"

Object:

coordinates {x: Number, y: Number, z: Number} in the coordinate space given in argument

If the matrix 'w2v' exists in the available transformations, this method gives the voxel position {i, j, k} corresponding to the given world position {x, y, z}. Since voxel coordinates are integers, the result is rounded by default but this can be changed by providing false to the 'round' arg.

(Object

= {x:0,y:0,z:0})

position in world coordinates (default: origin)

(Boolean

= true)

do you wish to round the output? (default: true)

Object:

the corresponding position in voxel coordinates as {i: Number, j: Number, k: Number}
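The conversion amounts to multiplying the position by a 4x4 affine matrix and optionally rounding. The sketch below assumes a column-major 1D matrix and a direct x to i, y to j, z to k correspondence; the function worldToVoxel and the sample matrix are hypothetical, and any axis swapping the library may apply is left out.

```javascript
// Apply a column-major 4x4 affine matrix to a 3D point.
// In column-major storage, element (row r, column c) sits at index c * 4 + r.
function worldToVoxel(w2v, position = { x: 0, y: 0, z: 0 }, round = true) {
  const { x, y, z } = position;
  const i = w2v[0] * x + w2v[4] * y + w2v[8] * z + w2v[12];
  const j = w2v[1] * x + w2v[5] * y + w2v[9] * z + w2v[13];
  const k = w2v[2] * x + w2v[6] * y + w2v[10] * z + w2v[14];
  return round
    ? { i: Math.round(i), j: Math.round(j), k: Math.round(k) }
    : { i, j, k };
}

// Example matrix: scale by 0.5 and translate by (10, 20, 30).
const sampleW2v = new Float32Array([
  0.5, 0, 0, 0,   // first column
  0, 0.5, 0, 0,   // second column
  0, 0, 0.5, 0,   // third column
  10, 20, 30, 1,  // fourth column (translation)
]);

const roundedVoxel = worldToVoxel(sampleW2v, { x: 3, y: 4, z: 5 });
const exactVoxel = worldToVoxel(sampleW2v, { x: 3, y: 4, z: 5 }, false);
```

Passing false as the last argument keeps the fractional position, which is useful when the caller wants to interpolate.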

Sample voxels along a segment in a transformed coordinate system (world or subject). This is achieved by converting the transformed coordinates into voxel coordinates; samples are then taken using the voxel unit rather than the transform unit, so that the sampling is finer.

(String)

id of a registered transformation that goes from arbitrary space to voxel space (aka. "*2v")

(String)

id of a registered transformation that goes from voxel space to arbitrary space (aka. "v2*" or the inverse of space2voxelTransformName)

(Object)

starting sampling point in transformed coordinates (world or subject) as {x: Number, y: Number, z: Number}

(Object)

end sampling point in transformed coordinates (world or subject) as {x: Number, y: Number, z: Number}

(Number

= 0)

time sample index to sample (default: 0)

Object:

an object holding arrays, shaped like this:

{
  positions: [ {x: x0, y: y0, z: z0}, {x: x1, y: y1, z: z1}, {x: x2, y: y2, z: z2}, ... ],
  labels: [ "(x0, y0, z0)", "(x1, y1, z1)", "(x2, y2, z2)", ... ],
  colors: [
    [ r0, r1, r2 ... ],
    [ g0, g1, g2 ... ],
    [ b0, b1, b2 ... ]
  ]
}

return null if posFrom or posTo is outside

Sample the color along a segment

(Object)

ending position of type {i: Number, j: Number, k: Number}

(any)

(any

= 0)

Object:

an object holding arrays, shaped like this:

{
  positions: [ {i: i0, j: j0, k: k0}, {i: i1, j: j1, k: k1}, {i: i2, j: j2, k: k2}, ... ],
  labels: [ "(i0, j0, k0)", "(i1, j1, k1)", "(i2, j2, k2)", ... ],
  colors: [
    [ r0, r1, r2 ... ],
    [ g0, g1, g2 ... ],
    [ b0, b1, b2 ... ]
  ]
}

return null if posFrom or posTo is outside

Get the size (width and height) of a slice along a given axis

Object:

width and height as an object like {w: Number, h: Number};

Get the space box in the given transform space coordinates. Due to a possible rotation involved in an affine transformation, the box will possibly have some void space on the sides. To get the actual volume corners in a transfo space, use the method getTransfoVolumeCorners().

(String)

id of a registered transformation that goes from voxel space to arbitrary space (aka. "v2*")

Object:

Box of shape {min: {x:Number, y:Number, z:Number, t:Number}, max: {x:Number, y:Number, z:Number, t:Number} }

Get the transformation matrix (as a copy) with a given name. The name can be "v2w", "w2v" or any other custom transfo name specified earlier.

(String)

name of the transformation.

Float32Array:

a copy (slice) of the transformation matrix, or null if it does not exist. This matrix is column-major (like OpenGL/WebGL, but unlike ThreeJS/BabylonJS)

Get the voxel value from a voxel position (in a voxel-coordinate system) with NO regard to how the data is supposed to be read. In other words, dimension.direction is ignored.

(Object)

3D position like {i, j, k}, i being the fastest varying, k being the slowest varying

(Number

= 0)

position along T axis (time dim, the very slowest varying dim when present)

Number:

the value at a given position.

For external use (e.g. in a shader). Get the matrix for swapping voxel coordinates before converting to world coord or after having converted from world. To serve multiple purposes, this method can output a 3x3 matrix (default case) or it can output a 4x4 affine transform matrix with a weight of 1 at its bottom-right position. This matrix can be used in two cases:

- swap [i, j, k] voxel coordinates before multiplying them by a "v2*" matrix

- swap voxel coordinates after multiplying [x, y, z] world coordinates by a "*2v" matrix

(Boolean

= true)

if true, horizontally flip the swap matrix. We usually need to horizontally flip this matrix because otherwise the voxel coordinates will be given as kji instead of ijk.

(Boolean

= false)

optional, output a 4x4 if true, or a 3x3 if false (default: false)

Array:

the 3x3 matrix in a 1D Array[9], arranged as column-major

[DON'T USE] Get a voxel value at a given position with regard to the direction the data is supposed to be read. In other words, dimension.step is taken into account.

Get a value from the dataset using {x, y, z} coordinates of a transformed space. Keep in mind world (or subject) are floating point but voxel coordinates are integers. This does not perform interpolation.

(String)

name of the affine transformation "*2v" - must exist

(Object)

non-voxel-space 3D coordinates, most likely world coordinates {x: Number, y: Number, z: Number}

(Number

= 0)

Position on time (default: 0)

Number:

value at this position

Get the w2v (world to voxel) transformation matrix (if it exists) in its swapped version. Most of the time, the swap matrix is an identity matrix, so swapping does not change anything, but some files do not respect the NIfTI spec regarding the dimensionality of the volume.

Array:

the 4x4 matrix (column major)

Is the given point in a transform coordinates system (world or subject) inside the dataset? This is achieved by converting the transformed coordinates into voxel coordinates.

Tells whether or not the given position is within the boundaries of the datacube. This works with voxel coordinates ijk

(Object)

Voxel coordinates as {i: Number, j: Number, k: Number}

where i is along the slowest varying dimension and k is along the fastest.

Boolean:

true if inside, false if outside

Initialize this with some data and appropriate size

(Float32Array)

the raw data

(Object)

may contain the following:

- options.xSize {Number} space length along x axis

- options.ySize {Number} space length along y axis

- options.zSize {Number} space length along z axis

- options.tSize {Number} space length along t axis (time)

- options.ncpp {Number} number of components per pixel. Default = 1

- options.deepCopy {Boolean} perform a deep copy if true. Simple association if false

Set the value of a voxel

Get a value from the dataset using {x, y, z} coordinates of a transformed space. Keep in mind world (or subject) are floating point but voxel coordinates are integers. This does not perform interpolation.

ImageToImageFilter

ImageToImageFilter is not to be used as-is but rather as a base class for any filter that takes a single Image2D as input and outputs a single Image2D. This class does not overload the update() method.

Extends Filter

LineString

A LineString is a vectorial representation of a line or polyline, open or closed.

When closed, it can be considered as a polygon.

By default, a LineString is 2 dimensional but the dimension can be changed when

using the .setData(...) method or before any point addition with .setNod().

To close a LineString, use .setMetadata("closed", true);, this will not add

any point but will flag this LineString as "closed".

Extends PixpipeContainer

Hardcode the datatype

Mesh3D

A Mesh3D object contains the information necessary to create a 3D mesh (for example using ThreeJS) and provides a generic data structure so that it can accept data from arbitrary mesh file formats.

Usage

- examples/fileToMniObj.html

- examples/meshInsideOutside.html

- examples/meshInsideOutsideCube.html

- examples/meshInsideOutsideSphere.html

Extends PixpipeContainerMultiData

Hardcode the datatype

Find the axis aligned bounding box of the mesh. Stores it in this._aabb

Builds the Bounding Volume Hierarchy tree. Stores it in this._bvhTree

Build the list of triangles

Build the list of triangles

Get all polygon faces normal (unit) vectors (a pointer to)

TypedArray:

the vertex positions

Get a copy of polygon faces normal (unit) vectors

TypedArray:

the vertex positions (deep copy)

Get all polygon faces

TypedArray:

the vertex positions

Get a copy of polygon faces

TypedArray:

the vertex positions (deep copy)

Get all vertex colors (a pointer to)

TypedArray:

the vertex positions

Get a copy of vertex colors

TypedArray:

the vertex positions (deep copy)

Get a copy of the vertex positions

TypedArray:

the vertex positions (deep copy)

Get all the vertex positions (a pointer to)

TypedArray:

the vertex positions

Performs the intersection between the mesh and a box to retrieve the list of triangles that are in the box. A triangle is considered as "in the box" when all its vertices are in the box.

(Object)

the box to test the intersection on as {min: [x, y, z], max: [x, y, z]}

Array:

all the intersections or null if none
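The "all vertices in the box" rule can be sketched with a brute-force loop over the triangles. This is not how the mesh performs the query (the class builds a BVH tree for that); the function names and the flat array layout (three floats per vertex, three vertex indices per triangle) are assumptions made for illustration, and returning triangle indices is also a choice of the example.

```javascript
// True when point [x, y, z] lies inside the box {min: [..], max: [..]}.
function isPointInBox(point, box) {
  return point.every((value, axis) => value >= box.min[axis] && value <= box.max[axis]);
}

// Brute-force version of the rule: a triangle counts only if all three
// of its vertices are inside the box. Returns triangle indices or null.
function trianglesInBox(positions, faces, box) {
  const hits = [];
  for (let t = 0; t < faces.length; t += 3) {
    const allInside = [0, 1, 2].every((corner) => {
      const v = faces[t + corner] * 3; // start of this vertex in positions
      return isPointInBox([positions[v], positions[v + 1], positions[v + 2]], box);
    });
    if (allInside) {
      hits.push(t / 3);
    }
  }
  return hits.length > 0 ? hits : null;
}

// Four vertices, two triangles; the second one has a vertex far away.
const meshPositions = new Float32Array([0, 0, 0, 1, 0, 0, 0, 1, 0, 5, 5, 5]);
const meshFaces = new Uint32Array([0, 1, 2, 1, 2, 3]);
const boxHits = trianglesInBox(meshPositions, meshFaces, { min: [-1, -1, -1], max: [2, 2, 2] });
```

A triangle that merely crosses the box without having all its vertices inside is not reported under this rule.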

Perform an intersection between a given ray and the mesh

(Array)

starting point of the ray as [x, y, z]

(Array)

normal vector of the ray direction as [x, y, z]

(Boolean

= false)

true: ignore triangles with a normal going in the same direction as the ray, false: consider all triangles

Array:

all the intersections or null if none

Performs the intersection between the mesh and a sphere to retrieve the list of triangles that are in the sphere. A triangle is considered as "in the sphere" when all its vertices are in the sphere.

(Object)

the sphere to test the intersection with as {center: [x, y, z], radius: Number}

Array:

all the intersections or null if none

Set the array of polygon faces normal (unit) vectors

(TypedArray)

array of index of vertex positions index

Set the array of polygon faces

(TypedArray)

array of index of vertex positions index

Set the array of vertex colors

(TypedArray)

array of vertex colors as [r, g, b, r, g, b, etc.] or [r, g, b, a, etc.]

Set the array of vertex positions

(TypedArray)

array vertex positions (does not perform a deep copy). The size of this array must be a multiple of 3

PixpipeContainer

PixpipeContainer is a common interface for Image2D and Image3D (and possibly some other future formats). Should not be used as-is.

Extends PixpipeObject

Associate d with the internal data object by pointer copy (if Object or Array)

(TypedArray)

pixel or voxel data. If multi-band, should be rgbargba...

PixpipeContainerMultiData

PixpipeContainerMultiData is a generic container very close to PixpipeContainer (from which it inherits). The main difference is that an instance of PixpipeContainerMultiData can contain multiple datasets since the _data property is an Array. This is particularly convenient when storing large arrays of numbers that must be split into multiple collections, such as meshes (a typed array for vertex positions, another typed array for grouping as triangles, another one for colors, etc.). The class PixpipeContainerMultiData should not be used as-is and should be inherited by a more specific data structure.

Extends PixpipeContainer

Get a deep copy clone of this object. Works for classes that inherit from PixpipeContainerMultiData. Notice: the sub-datasets will possibly be in a different order, but with an index that tracks them properly. In other words, the order may differ but this is not an issue.

PixpipeContainerMultiData:

a clone.

Get a sub-dataset given its name. Notice: This gives a pointer, not a copy. Modifying the returned array will affect this object.

(String)

name of the sub-dataset

TypedArray:

a pointer to the typed array of the sub-dataset

Get a copy of a sub-dataset given its name. Notice: This gives a copy of a typed array. Modifying the returned array will NOT affect this object.

(String)

name of the sub-dataset

TypedArray:

a copy of the typed array of the sub-dataset
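The difference between the pointer and the copy can be seen with a toy container. MultiDataSketch is a hypothetical class written for this example; it mirrors the idea of an array of sub-datasets tracked by a name index, but it is not the Pixpipe implementation.

```javascript
// Toy container: an array of sub-datasets plus a name -> position index.
class MultiDataSketch {
  constructor() {
    this._data = [];
    this._dataIndex = new Map();
  }

  // Associate the array by reference (no deep copy).
  setData(d, name) {
    if (this._dataIndex.has(name)) {
      this._data[this._dataIndex.get(name)] = d;
    } else {
      this._dataIndex.set(name, this._data.length);
      this._data.push(d);
    }
  }

  // Pointer to the stored typed array, or null if the name is unknown.
  getData(name) {
    return this._dataIndex.has(name) ? this._data[this._dataIndex.get(name)] : null;
  }

  // Independent copy of the stored typed array, or null if unknown.
  getDataCopy(name) {
    const d = this.getData(name);
    return d === null ? null : d.slice();
  }
}

const multiContainer = new MultiDataSketch();
multiContainer.setData(new Float32Array([0, 0, 0, 1, 1, 1]), "vertexPositions");

const positionsPointer = multiContainer.getData("vertexPositions");
const positionsCopy = multiContainer.getDataCopy("vertexPositions");
positionsPointer[0] = 42; // visible through the container
positionsCopy[1] = 99;    // not visible through the container
```

Working on the pointer avoids duplicating large buffers, at the cost of letting callers alter the container's content.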

Associate d with the internal sub-dataset by pointer copy (if Object or Array). A name is necessary so that the internal structure can identify the sub-dataset, to process it or to retrieve it.

(TypedArray)

array of data to associate (not a deep copy)

(String

= null)

name to give to this sub-dataset

PixpipeObject

PixpipeObject is the base object of all. It creates a uuid and has a few generic attributes like type, name and description. Not all of these attributes are always useful.

Access it like a static attribute. Must be overloaded.

Called just after the metadata were raw-copied. Useful to perform checks and preprocessing. To be overwritten

Copy all the metadata from the object in argument to this. A deep copy by serialization is performed. The metadata that exist only in this are kept.

(PixpipeObject)

the object to copy metadata from

Get a clone of the _metadata object. Deep copy, no reference in common.

Return a copy of the uuid

Signal1D

An object of type Signal1D is a single dimensional signal, most likely represented by a Float32Array and a sampling frequency. To change the sampling frequency use the method .setMetadata('samplingFrequency', Number);, default value is 100. We tend to consider this frequency to be in Hz, but there is no hardcoded unit and it all depends on the application. It is important to specify this metadata because some processing filters may use it.

Usage

Extends PixpipeContainer

Get the raw data as a typed array

Float32Array:

the data, NOT A COPY

Set the data.

(Float32Array)

the data

(Boolean

= false)

true: will perform a deep copy of the data array, false: will just associate the pointer

_DECODER

All decoders inherit from Filter. They are intended to parse a specific file format and output one of the basic PixpipeObject types. In addition to decoders, you can also find some encoders (PixBinEncoder).

A decoder will usually take an ArrayBuffer as input.

AllFormatsGenericDecoder

AllFormatsGenericDecoder is a generic decoder for all the file formats Pixpipe can handle. This means an instance of AllFormatsGenericDecoder can output objects of various modalities: an Image3D, Image2D, Signal1D or a Mesh3D.

Like any generic decoder, this one performs decoding attempts and, if one succeeds, an object is created. Some of the compatible formats do not have an easy escape such as a magic number check and thus need a full decoding attempt before the decoder can decide whether or not the buffer being decoded matches a given decoder. This can create a bottleneck and we advise not to use AllFormatsGenericDecoder if you know your file will be of a specific type or of a specific modality.

Notice: at the moment, AllFormatsGenericDecoder does not decode the pixBin format.

Extends GenericDecoderInterface

EdfDecoder

An instance of EdfDecoder takes an ArrayBuffer as input. This ArrayBuffer must come from an EDF file (European Data Format). Such a file can have multiple signals encoded internally, usually from different sensors; this filter will output as many Signal1D objects as there are signals in the input file. In addition, each signal is composed of records (e.g. 1 sec per record). This decoder concatenates records to output a longer signal. Still, the metadata in each Signal1D tells what the length of the original record is.
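Concatenating records into one longer signal can be sketched as below. The function concatenateRecords and the sample values are hypothetical; real EDF parsing (header, per-signal scaling) is not covered here.

```javascript
// Join several records of the same signal into one Float32Array.
function concatenateRecords(records) {
  const totalLength = records.reduce((sum, record) => sum + record.length, 0);
  const signal = new Float32Array(totalLength);
  let offset = 0;
  for (const record of records) {
    signal.set(record, offset); // copy this record at its position
    offset += record.length;
  }
  return signal;
}

// Three records of two samples each, as one sensor could produce them.
const edfRecords = [
  new Float32Array([1, 2]),
  new Float32Array([3, 4]),
  new Float32Array([5, 6]),
];
const fullSignal = concatenateRecords(edfRecords);
const originalRecordLength = edfRecords[0].length; // worth keeping as metadata
```

Keeping the original record length alongside the joined signal lets a consumer recover the record boundaries later.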

Usage

Extends Decoder

GenericDecoderInterface

GenericDecoderInterface is an interface and should not be used as-is. GenericDecoderInterface provides the elementary kit to build a multiformat decoder. Classes that implement GenericDecoderInterface must have a list of decoder constructors stored in this._decoders. The classes Image2DGenericDecoder, Image3DGenericDecoderAlt and Mesh3DGenericDecoder are using GenericDecoderInterface.

Extends Decoder

Image2DGenericDecoder

This class implements GenericDecoderInterface that already contains the

successive decoding logic. For this reason this filter does not need to have the

_run method to be reimplemented.

An instance of Image2DGenericDecoder takes an ArrayBuffer as input 0 (.addInput(myArrayBuffer)) and outputs an Image2D.

The update method will perform several decoding attempts, using the readers

specified in the constructor.

In case of success (one of the registered decoders was compatible with the data)

the metadata decoderConstructor and decoderName are made accessible and give

information about the file format. If no decoder managed to decode the input buffer,

this filter will not have any output.
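The successive decoding logic can be pictured as a loop over candidate decoders that stops at the first success. Everything in this sketch is hypothetical (the two toy decoder classes, the decode() method and decodeWithFirstMatch); only the first bytes used for recognition (0x89 for PNG, 0xFF 0xD8 for JPEG) are real file signatures.

```javascript
// Toy decoders: return a decoded object, or null when the buffer does not match.
class PngLikeDecoder {
  decode(buffer) {
    const bytes = new Uint8Array(buffer);
    return bytes[0] === 0x89 ? { type: "Image2D" } : null;
  }
}

class JpegLikeDecoder {
  decode(buffer) {
    const bytes = new Uint8Array(buffer);
    return bytes[0] === 0xff && bytes[1] === 0xd8 ? { type: "Image2D" } : null;
  }
}

// Try each decoder constructor in turn and keep the first one that succeeds.
function decodeWithFirstMatch(buffer, decoderConstructors) {
  for (const DecoderConstructor of decoderConstructors) {
    let output = null;
    try {
      output = new DecoderConstructor().decode(buffer);
    } catch (error) {
      output = null; // a failing decoder simply means "not this format"
    }
    if (output !== null) {
      return {
        output,
        decoderConstructor: DecoderConstructor,
        decoderName: DecoderConstructor.name,
      };
    }
  }
  return null; // no decoder managed to decode the buffer: no output
}

const candidateDecoders = [PngLikeDecoder, JpegLikeDecoder];
const jpegResult = decodeWithFirstMatch(new Uint8Array([0xff, 0xd8, 0x00]).buffer, candidateDecoders);
const unknownResult = decodeWithFirstMatch(new Uint8Array([1, 2, 3]).buffer, candidateDecoders);
```

The order of the list matters: cheap checks should come first, since formats without a magic number may require a full decoding attempt.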

Developers: if a new 2D dataset decoder is added, reference it here and in the import list

Usage

Extends GenericDecoderInterface

Image3DGenericDecoder

This class implements GenericDecoderInterface that already contains the

successive decoding logic. For this reason this filter does not need to have the

_run method to be reimplemented.

An instance of Image3DGenericDecoder takes an ArrayBuffer as input 0 (.addInput(myArrayBuffer)) and outputs an Image3D.

The update method will perform several decoding attempts, using the readers

specified in the constructor.

In case of success (one of the registered decoders was compatible with the data)

the metadata decoderConstructor and decoderName are made accessible and give

information about the file format. If no decoder managed to decode the input buffer,

this filter will not have any output.

Developers: if a new 3D dataset decoder is added, reference it here.

Usage

Extends GenericDecoderInterface

JpegDecoder

An instance of JpegDecoder will decode a JPEG image in native JavaScript and output an Image2D. This is of course slower than using io/FileImageReader.js but it is compatible with Node and does not rely on HTML5 Canvas.

Usage

Extends Decoder

Mesh3DGenericDecoder

This class implements GenericDecoderInterface that already contains the

successive decoding logic. For this reason this filter does not need to have the

_run method to be reimplemented.

An instance of Mesh3DGenericDecoder takes an ArrayBuffer as input 0 (.addInput(myArrayBuffer)) and outputs a Mesh3D object.

The update method will perform several decoding attempts, using the readers

specified in the constructor.

In case of success (one of the registered decoders was compatible with the data)

the metadata decoderConstructor and decoderName are made accessible and give

information about the file format. If no decoder managed to decode the input buffer,

this filter will not have any output.

Developers: if a new format decoder is added, reference it here.

Usage

Extends GenericDecoderInterface

MghDecoder

Decodes a MGH file.

Takes an ArrayBuffer as input (0) and outputs an Image3D

Some doc can be found here

Usage

Extends Decoder

Minc2Decoder

Decodes an HDF5 file, but is most likely to be restricted to the features that are used for the Minc2 file format.

The metadata "debug" can be set to true to

enable a verbose mode.

Takes an ArrayBuffer as input (0) and outputs an Image3D

Usage

Extends Decoder

A MINC volume is an RGB volume if all three are true: 1. The voxel type is unsigned byte. 2. It has a vector_dimension in the last (fastest-varying) position. 3. The vector dimension has length 3.

(object)

The header object representing the structure

of the MINC file.

(object)

The typed array object used to represent the

image data.

boolean:

True if this is an RGB volume.

This function copies the RGB voxels to the destination buffer. Essentially we just convert from 24 to 32 bits per voxel. This is another MINC-specific function.

(object)

The 'link' object created using createLink(),

that corresponds to the image within the HDF5 or NetCDF file.

object:

A new ArrayBuffer that contains the original RGB

data augmented with alpha values.
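The 24 to 32 bits conversion boils down to inserting an alpha byte after every RGB triplet. The function rgbToRgba is a hypothetical sketch, and using 255 (fully opaque) as the alpha value is an assumption.

```javascript
// Expand packed RGB bytes into RGBA bytes and return the new ArrayBuffer.
function rgbToRgba(rgbBytes, alpha = 255) {
  const voxelCount = rgbBytes.length / 3;
  const rgbaBytes = new Uint8Array(voxelCount * 4);
  for (let v = 0; v < voxelCount; v++) {
    rgbaBytes[v * 4] = rgbBytes[v * 3];         // red
    rgbaBytes[v * 4 + 1] = rgbBytes[v * 3 + 1]; // green
    rgbaBytes[v * 4 + 2] = rgbBytes[v * 3 + 2]; // blue
    rgbaBytes[v * 4 + 3] = alpha;               // added alpha (assumed opaque)
  }
  return rgbaBytes.buffer;
}

// Two RGB voxels become two RGBA voxels.
const rgbaBuffer = rgbToRgba(new Uint8Array([1, 2, 3, 4, 5, 6]));
const rgbaView = new Uint8Array(rgbaBuffer);
```

The output is one third larger than the input, since each voxel grows from three bytes to four.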

Convert the MINC data from voxel to real range. This returns a new buffer that contains the "real" voxel values. It does less work for floating-point volumes, since they don't need scaling.

For debugging/testing purposes, also gathers basic voxel statistics, for comparison against mincstats.

(object)

The link object corresponding to the image data.

(object)

The link object corresponding to the image-min

data.

(object)

The link object corresponding to the image-max

data.

(object)

An array of exactly two items corresponding to the minimum and maximum valid raw voxel values.

(boolean)

True if we should print debugging information.

any:

A new ArrayBuffer containing the rescaled data.
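The conversion from raw voxel values to the real range can be sketched as a linear mapping of the valid raw range onto the image-min/image-max range. This is a simplified illustration: the function rescaleVoxels is hypothetical, it uses a single image-min/image-max pair for the whole buffer, it returns a Float32Array rather than an ArrayBuffer, and it gathers no statistics.

```javascript
// Linearly map raw values from [validMin, validMax] to [imageMin, imageMax].
function rescaleVoxels(raw, validRange, imageMin, imageMax) {
  const [validMin, validMax] = validRange;
  const real = new Float32Array(raw.length);
  for (let n = 0; n < raw.length; n++) {
    const normalized = (raw[n] - validMin) / (validMax - validMin); // 0..1
    real[n] = normalized * (imageMax - imageMin) + imageMin;
  }
  return real;
}

// Unsigned bytes covering the full valid range, mapped onto [-1, 1].
const realValues = rescaleVoxels(new Uint8Array([0, 255]), [0, 255], -1, 1);
```

Floating-point volumes usually already hold real values, which is why less work is needed for them.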

MniObjDecoder

While most parsers need an ArrayBuffer as input, MNI OBJ mesh files are text files, so an instance of MniObjDecoder takes the string content of such a file. The string content of a file can be provided by a FileToArrayBufferReader or UrlToArrayBufferReader with the metadata readAsText set to true.

Then use the method .addInput( myString ) to provide the input and call the method .update(). If the input is successfully parsed, the output of a MniObjDecoder is a Mesh3D. If the file is invalid, a message is probably written in the JS console and no output is available.

Usage

Extends Decoder

NiftiDecoder

Important information: NIfTI datasets use two indexing methods:

- A voxel based system (i, j, k), the most intuitive, where i is the fastest varying dim and k is the slowest varying dim. Thus for a given (i, j, k) the value is at (i + j*dim[1] + k*dim[1]*dim[2])

- A subject based system (x, y, z), where +x is right, +y is anterior, +z is superior (right handed coord system). This system is CENTER pixel/voxel and is the result of a transformation from (i, j, k) and a scaling given by the size of each voxel in a world unit (eg. mm)
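The voxel based indexing formula translates directly into code. The function voxelOffset is a hypothetical helper; dim follows the NIfTI convention in which dim[0] holds the number of dimensions and dim[1], dim[2], dim[3] hold the sizes along i, j and k.

```javascript
// Position of voxel (i, j, k) in the 1D data array, i varying fastest.
function voxelOffset(i, j, k, dim) {
  return i + j * dim[1] + k * dim[1] * dim[2];
}

// A 4 x 5 x 6 volume: dim[0] is the number of dimensions.
const niftiDim = [3, 4, 5, 6];
const sampleOffset = voxelOffset(1, 2, 3, niftiDim); // 1 + 2*4 + 3*4*5
```

Incrementing i moves to the next value in memory, while incrementing k jumps over a whole slice of dim[1] * dim[2] values.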

NIfTI provides three alternatives to characterize this transformation:

METHOD 1 , when header.qform_code = 0 Here, no specific orientation difers in [x, y, z], only spatial scaling based on voxel world dimensions. This method is NOT the default one, neither it is the most common. It is mainly for bacward compatibility to ANALYZE 7.5. Thus we simply have: x = pixdim[1] i y = pixdim[2] j z = pixdim[3] * k

METHOD 2, the "normal" case, when header.qform_code > 0 In this situation, three components are involved in the transformation: 1. voxel dimensions (header.pixDims[]) for the spatial scaling 2. a rotation matrix, for orientation 3. a shift Thus, we have: x header.pixDims[1] * i [ y ] = [ R21 R22 R23 ] header.pixDims[2] j ] + header.qoffset_y [ R31 R32 R33 ] qfac header.pixDims[3] * k ] [ header.qoffset_z ] Info: The official NIfTI header description ( https://nifti.nimh.nih.gov/pub/dist/src/niftilib/nifti1.h ) was used to interpret the data.
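As a minimal sketch of the two indexing systems described above, the helpers below compute the flat offset of a voxel and apply METHOD 1 and METHOD 2. The function names and the argument layout (R as an array of three rows, qoffset as [x, y, z]) are assumptions made for illustration; they are not part of the Pixpipe API.

```javascript
// Illustrative helpers only, not Pixpipe functions.
// dim and pixDims follow the NIfTI convention where index 0 is reserved,
// so dim[1..3] and pixDims[1..3] refer to the i, j and k axes.

// Offset of voxel (i, j, k) in the flat data array (i varies fastest).
function voxelOffset(i, j, k, dim) {
  return i + j * dim[1] + k * dim[1] * dim[2];
}

// METHOD 1: pure scaling by the voxel size.
function voxelToWorldMethod1(i, j, k, pixDims) {
  return [pixDims[1] * i, pixDims[2] * j, pixDims[3] * k];
}

// METHOD 2: scaling (with qfac on the k axis), rotation R, then shift.
// R is a 3x3 rotation given as an array of rows (assumed layout).
function voxelToWorldMethod2(i, j, k, pixDims, R, qfac, qoffset) {
  const scaled = [pixDims[1] * i, pixDims[2] * j, qfac * pixDims[3] * k];
  return R.map(
    (row, n) =>
      row[0] * scaled[0] + row[1] * scaled[1] + row[2] * scaled[2] + qoffset[n]
  );
}
```

With an identity rotation, METHOD 2 reduces to METHOD 1 plus the offset, with the z axis mirrored when qfac is -1.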

Extends Decoder

PixBinDecoder

A PixBinDecoder instance decodes a pixbin file and outputs an Image2D or Image3D.

The input, specified by .addInput(...), must be an ArrayBuffer (from a UrlToArrayBufferFilter, a UrlToArrayBufferReader or another source).

The metadata targetType can be a string or an array of strings naming the accepted constructor(s). The default value ("*") means the decoder will decode blocks of the pixBin file of any type. If you decide to use myPixBinDecoder.setMetadata("targetType", ["Image2D", "Mesh3D"]); then blocks of any other type will be skipped and will not be part of the outputs.

Usage

Extends Decoder

PixBinEncoder

A PixBinEncoder instance takes an Image2D or Image3D as input with addInput(...) and encodes it so that it can be saved as a *.pixp file.

An output filename can be specified using .setMetadata("filename", "yourName.pixp"); by default, the name is "untitled.pixp".

When update() is called, a gzip blob is prepared as output[0] and can then be downloaded by calling the method .download(). The gzip blob could also be sent over AJAX using a third-party library.

Usage

Extends Filter

[static] the first sequence of bytes for a pixbin file is this ASCII string

PixpDecoder

A PixpDecoder instance decodes a *.pixp file and outputs an Image2D or Image3D.

The input, specified by .addInput(...), must be an ArrayBuffer (from a UrlToArrayBufferFilter, a UrlToArrayBufferReader or another source).

Usage

Extends Decoder

PixpEncoder

A PixpEncoder instance takes an Image2D or Image3D as input with addInput(...) and encodes it so that it can be saved as a *.pixp file.

An output filename can be specified using .setMetadata("filename", "yourName.pixp"); by default, the name is "untitled.pixp".

When update() is called, a gzip blob is prepared as output[0] and can then be downloaded by calling the method .download(). The gzip blob could also be sent over AJAX using a third-party library.

Usage

Extends Filter

Download the generated file

PngDecoder

An instance of PngDecoder will decode a PNG image in native JavaScript and output an Image2D. This is of course slower than using io/FileImageReader.js, but it is compatible with Node and does not rely on HTML5 Canvas.

Usage

Extends Decoder

Checks if the input buffer is of a png file

(ArrayBuffer)

an array buffer inside which a PNG could be hiding!

Boolean:

true if the buffer is a valid PNG buffer, false if not
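One way such a check can be written is to compare the first eight bytes of the buffer with the fixed PNG file signature. The sketch below is an assumption about how the test could work, not the decoder's actual code, and the name looksLikePng is hypothetical. It only inspects the signature, so it does not prove that the rest of the file is well formed.

```javascript
// The 8-byte signature that starts every PNG file.
const PNG_SIGNATURE = [137, 80, 78, 71, 13, 10, 26, 10];

// Hypothetical helper: true when the buffer starts with the PNG signature.
function looksLikePng(buffer) {
  if (!(buffer instanceof ArrayBuffer) || buffer.byteLength < PNG_SIGNATURE.length) {
    return false;
  }
  const bytes = new Uint8Array(buffer, 0, PNG_SIGNATURE.length);
  return PNG_SIGNATURE.every((value, index) => bytes[index] === value);
}
```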

Signal1DGenericDecoder

This class implements GenericDecoderInterface, which already contains the successive decoding logic. For this reason, this filter does not need to reimplement the _run method.

An instance of Signal1DGenericDecoder takes an ArrayBuffer as input 0 (.addInput(myArrayBuffer)) and outputs a Signal1D.

The update method will perform several decoding attempts, using the readers specified in the constructor.

In case of success (one of the registered decoders was compatible with the data), the metadata decoderConstructor and decoderName are made accessible and give information about the file format. If no decoder managed to decode the input buffer, this filter will not have any output.

Developers: if a new 2D dataset decoder is added, reference it here and in the import list.

Usage

Extends GenericDecoderInterface

TiffDecoder

Reads and decodes the Tiff format. The decoder for BigTiff is experimental. The TiffDecoder takes an ArrayBuffer of a Tiff file as input and outputs an Image2D. The Tiff format is very broad and this decoder, thanks to the Geotiff npm package, is compatible with single or multiband images, with or without compression, using various bit depths and types (8 bits, 32 bits, etc.).

Info: Tiff 6.0 specification http://www.npes.org/pdf/TIFF-v6.pdf

Usage

Extends Decoder

_FILTER

Filters are objects that take one or more inputs, perform some task and create one or more outputs.

The input must never be overwritten by a filter; a filter should create an entirely new object.

ApplyColormapFilter

An instance of ApplyColormapFilter applies a colormap on a chosen channel (aka. component) of an Image2D.

Several optional metadata are available to tune the end result:

- .setMetadata("style", xxx: String); see the complete list at http://www.pixpipe.io/pixpipejs/examples/colormap.html . Default is "jet".

- .setMetadata("flip", xxx: Boolean); a flipped colormap reverses its style. Default: false.

- .setMetadata("min", xxx: Number); and .setMetadata("max", xxx: Number); if specified, will replace the min and max of the input image. This can be used to enhance or lower the contrast.

- .setMetadata("clusters", xxx: Number); the number of color clusters. If not null, this will turn a smooth gradient into a set of xxx iso levels of color. Default: null.

- .setMetadata("component", xxx: Number); the component of the input image to use for the colormapping. Default: 0.

This filter requires an Image2D as input 0 and outputs a 3-component RGB Image2D of the same size as the input.

Usage

Extends ImageToImageFilter

BandPassSignal1D

Extends Filter

ContourHolesImage2DFilter

Extends Filter

ContourImage2DFilter

An instance of ContourImage2DFilter takes a seed (.setMetadata("seed", [x, y]))

and finds the contour of the shape of a segmented image by going north.

The input must be an Image2D and the output is a LineString.

Two options are available for neighbour connexity: 4 or 8. Set this option using

.setMetadata("connexity", n).

Usage

Extends Filter

CropImageFilter

An instance of CropImageFilter is used to crop an Image2D. This filter accepts

a single input, using .addInput( myImage ), then, it requires a top left point

that must be set with .setMetadata( "x", Number) and .setMetadata( "y", Number).

In addition, you must specify the width and height of the output using

.setMetadata( "w", Number) and .setMetadata( "h", Number).

Usage

Extends ImageToImageFilter

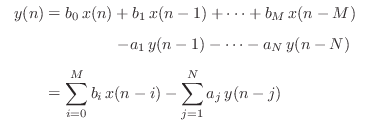

DifferenceEquationSignal1D

Performs a difference equation (= discrete version of a differential equation) on a Signal1D object. This is convenient to perform a low-pass or high-pass filter.

Coefficients are needed to run this filter; set them using the following methods: .setMetadata("coefficientsB", [Number, Number, ...]) and .setMetadata("coefficientsA", [Number, Number, ...]). This is related to the following:

Where coefficients A and B are arrays of the same size, knowing that the first number of the array coefficients A will not be used (just set it to 1.0).

more information on the module repo

and even more on the original description page.
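To make the role of the two coefficient arrays concrete, here is a minimal sketch of a difference equation in its usual direct form, y[n] = sum(b[k] * x[n-k]) - sum(a[k] * y[n-k]) with k starting at 1 for the A terms. The sign convention and the function name are assumptions for illustration; the module used by this filter may define them differently.

```javascript
// Hypothetical standalone version, not the Pixpipe implementation.
// x: input samples, coefficientsB: feed-forward terms,
// coefficientsA: feedback terms (index 0 is ignored, as described above).
function differenceEquation(x, coefficientsB, coefficientsA) {
  const y = new Float64Array(x.length);
  for (let n = 0; n < x.length; n++) {
    let acc = 0;
    for (let k = 0; k < coefficientsB.length; k++) {
      if (n - k >= 0) acc += coefficientsB[k] * x[n - k];
    }
    // Start at k = 1: the first A coefficient is never used.
    for (let k = 1; k < coefficientsA.length; k++) {
      if (n - k >= 0) acc -= coefficientsA[k] * y[n - k];
    }
    y[n] = acc;
  }
  return y;
}
```

For instance, B = [0.5, 0.5] with A = [1, 0] is a two-sample moving average, which acts as a simple low-pass filter.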

Usage

Extends Filter

FloodFillImageFilter

A FloodFillImageFilter instance takes an Image2D as input and gives an Image2D as output.

The starting point of the flood (seed) has to be set using .setMetadata("seed", [x, y]) where x and y are within the boundaries of the image.

The tolerance can also be set using .setMetadata("tolerance", n). The tolerance is an absolute average over each component per pixel.

Neighbour connexity can be 4 or 8 using .setMetadata("connexity", n).

Destination color can be set with .setMetadata("color", [r, g, b]). The color array depends on your input image and can be of size 1 (intensity), 3 (RGB), 4 (RGBA) or other if multispectral.

In addition to the output image, the list of internal hit points is created and available with .getOutput("hits").
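The options above can be illustrated with a small standalone flood fill working on a flat pixel array. This is a sketch under assumptions, not the filter's code: the function name, the argument order and the exact tolerance test (mean absolute difference to the seed color, compared with "less than or equal") are all hypothetical.

```javascript
// Hypothetical flood fill on interleaved pixel data (ncpp components per pixel).
function floodFill(data, width, height, ncpp, seed, color, tolerance = 0, connexity = 4) {
  const out = data.slice(); // the input is never overwritten
  const hits = [];
  const visited = new Uint8Array(width * height);
  const seedOffset = (seed[1] * width + seed[0]) * ncpp;
  const seedColor = Array.from(data.slice(seedOffset, seedOffset + ncpp));
  const neighbours = connexity === 8
    ? [[1, 0], [-1, 0], [0, 1], [0, -1], [1, 1], [1, -1], [-1, 1], [-1, -1]]
    : [[1, 0], [-1, 0], [0, 1], [0, -1]];

  // Mean absolute difference to the seed color, over all components.
  const matches = (x, y) => {
    const offset = (y * width + x) * ncpp;
    let diff = 0;
    for (let c = 0; c < ncpp; c++) diff += Math.abs(data[offset + c] - seedColor[c]);
    return diff / ncpp <= tolerance;
  };

  const stack = [[seed[0], seed[1]]];
  visited[seed[1] * width + seed[0]] = 1;
  while (stack.length > 0) {
    const [x, y] = stack.pop();
    hits.push([x, y]);
    for (let c = 0; c < ncpp; c++) out[(y * width + x) * ncpp + c] = color[c];
    for (const [dx, dy] of neighbours) {
      const nx = x + dx;
      const ny = y + dy;
      if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
      if (visited[ny * width + nx] || !matches(nx, ny)) continue;
      visited[ny * width + nx] = 1;
      stack.push([nx, ny]);
    }
  }
  return { image: out, hits };
}
```

On an image split by a diagonal line, a connexity of 4 stays on one side of the line while a connexity of 8 leaks through the diagonal gaps.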

Usage

Extends ImageToImageFilter

ForEachPixelImageFilter

A filter of type ForEachPixelImageFilter can perform an operation on every pixel of an Image2D with a simple interface. For this purpose, a per-pixel callback must be specified using the method .on( "pixel" , function( coord, color ){ ... }) where coord is of the form {x, y} and color is of the form [r, g, b, a] (possibly with a different number of components per pixel). This callback must return either null (original color not modified) or an array of color components (same dimension as the one in the arguments).

Usage

Extends ImageToImageFilter

var forEachPixelFilter = new pixpipe.ForEachPixelImageFilter();

forEachPixelFilter.on( "pixel", function(position, color){
  return [
    color[1], // red (takes the values from green)
    color[0], // green (takes the values from red)
    color[2] * 0.5, // blue gets 50% darker
    255 // alpha, at max
  ];
});

Run the filter

ForEachPixelReadOnlyFilter

This Filter is a bit special in the sense that it does not output anything. It takes an Image2D as input "0", and the event "pixel" must be defined with a callback taking two arguments: the position as an object {x: Number, y: Number} and the color as an array, i.e. [Number, Number, Number] for an RGB image.

This filter is convenient for computing statistics or for anything where the output is manually created (because the filter ForEachPixelImageFilter creates an output with the same number of bands).

Usage

Extends Filter

GradientImageFilter

An instance of GradientImageFilter takes 2 input Image2D: a derivative in x, with the category "dx" and a derivative in y with the category "dy". They must be the same size and have the same number of components per pixel.

Usage

Extends ImageToImageFilter

HighPassSignal1D

Extends Filter

IDWSparseInterpolationImageFilter

An instance of IDWSparseInterpolationImageFilter performs a 2D interpolation from a sparse dataset using the method of Inverse Distance Weighting.

The original dataset is specified using the method .addInput( seeds, "seeds" ), where seeds is an Array of {x: Number, y: Number, value: Number}.

This filter outputs an Image2D with interpolated values. The size of the output must be specified using the method .setMetadata( "outputSize", {width: Number, height: Number}).

The IDW algorithm can be tuned with a "strength", which is essentially the value of the exponent applied to the distances. Default is 2 but it is common to see a value of 1 or 3. With higher values, the output will look like a cell pattern. The strength can be defined using the method .setMetadata( "strength", Number ).

The metadata "k" specifies the number of closest neighbor seeds to consider for each pixel of the output. If larger than the number of seeds, it will be automatically clamped to the number of seeds. Set "k" with .setMetadata( "k", Number ).

To make the interpolation faster when done several times with seeds at the same positions but with different values, a distance map is built at the beginning. The map that is built first will be reused unless the metadata "forceBuildMap" is set to true. If true, the map will be rebuilt at every run. It can take a while, so make sure you rebuild the map only if you need to (= seeds changed position, output image changed size). Use the method .setMetadata( "forceBuildMap", Boolean ).

Note 1: seeds can be outside the boundaries of the original image.

Note 2: interpolated values are floating point.

Note that only single-component images are output by this filter.

Resources: https://www.e-education.psu.edu/geog486/node/1877
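The weighting scheme described above can be sketched in a few lines. This standalone version is an illustration under assumptions (function name, argument order, no cached distance map) rather than the filter's implementation: each output pixel is the average of its k closest seeds, weighted by 1 / distance^strength.

```javascript
// Hypothetical brute-force IDW on a width x height grid.
// seeds: Array of {x, y, value}. Returns a Float32Array of width * height values.
function idwInterpolate(seeds, width, height, strength = 2, k = seeds.length) {
  if (seeds.length === 0) throw new Error("At least one seed is required.");
  const kept = Math.min(k, seeds.length); // clamp k to the number of seeds
  const out = new Float32Array(width * height);

  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      // The k closest seeds for this pixel (no distance map is cached here).
      const nearest = seeds
        .map((s) => ({ value: s.value, d: Math.hypot(s.x - x, s.y - y) }))
        .sort((a, b) => a.d - b.d)
        .slice(0, kept);

      let value;
      if (nearest[0].d === 0) {
        value = nearest[0].value; // the pixel sits exactly on a seed
      } else {
        let weighted = 0;
        let total = 0;
        for (const s of nearest) {
          const w = 1 / Math.pow(s.d, strength);
          weighted += w * s.value;
          total += w;
        }
        value = weighted / total;
      }
      out[y * width + x] = value;
    }
  }
  return out;
}
```

Sorting all seeds for every pixel is what makes this version slow; reusing per-pixel distances between runs, as the distance map does, avoids repeating that work when only the seed values change.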

Usage

Extends Filter

Image3DToMosaicFilter

An instance of Image3DToMosaicFilter takes an Image3D as input and outputs a

mosaic composed of each slice. The axis: "x", "y" or "z" can be

specified with setMetadata("axis", "x"), the default being x.

The default output image is 4096x4096 but these boundaries can be changed using

setMetadata("maxWidth", n) and setMetadata("maxHeight", m).

These are boundaries so the size of the output image(s) will possibly be lower

to not contain unused space.

If mosaicing the whole given Image3D does not fit in maxWidth*maxHeight, more

Image2D will be created and accessible through getOutput(n).

All output images have the same size, so the last one may have dead space.

To know precisely the size of the output mosaic use getMetadata("gridWidth")

and getMetadata("gridHeight"), this will give the number of slices used in

horizontal and vertical respectively.

By setting the time metadata we can get a mosaic at a given time position,

the default being 0. If set to -1, then the filter outputs the whole time

series.

Usage

Extends Filter

ImageBlendExpressionFilter

An instance of ImageBlendExpressionFilter takes Image2D inputs, as many as

we need as long as they have the same size and the same number of components

per pixel.

This filter blends images pixel values using a literal expression. This expression

should be set using setMetadata( "expresssion", "A * B" ) , where A and B

are the categories set in input.

Using a blending expression is the easiest way to test a blending, but it is a pretty slow process since the expression has to be evaluated each time. To speed up your process, it is recommended to develop a new filter that does exactly (and only) the blending method you want.

Usage

Extends ImageToImageFilter

ImageDerivativeFilter

An ImageDerivativeFilter will compute the dx and dy derivatives using the filter h = [1, -1].

You can change the built-in filters that perform the derivative by setting the metadata

"dxFilter" and "dyFilter" with the method .setMetadata(). See the documentation of

SpatialConvolutionFilter to make your custom filter compatible.

Usage

Extends ImageToImageFilter

LowPassFreqSignal1D

An object of type LowPassFreqSignal1D performs a low pass in the frequency domain, which means the input Signal1D object must already be in the frequency domain. This filter requires 2 inputs:

- the real part of the Fourier transform output as a Signal1D. To be set with .addInput("real", Signal1D)

- the imaginary part of the Fourier transform output as a Signal1D. To be set with .addInput("imaginary", Signal1D)

In addition to data, a few metadata can be used:

- mandatory: the cutoffFrequency in Hz, using the method .setMetadata("cutoffFrequency", Number). It cannot be higher than half of the sampling frequency (cf. Nyquist)

- optional: the filterType, using the method .setMetadata("filterType", String). It can be gaussian or rectangular (default: gaussian)

- optional: the gaussianTolerance, using the method .setMetadata("gaussianTolerance", Number). This is the value (frequency response) under which we want to use 0 instead of the actual gaussian value. Should be small. (default: 0.01)

Note: the filter type rectangular should be used with caution because it simply thresholds the frequency spectrum on a given range. When transformed back to the time domain, this is likely to produce artifact waves due to the Gibbs phenomenon.

Usage

Extends Filter

LowPassSignal1D

Extends Filter

Mesh3DToVolumetricHullFilter

An instance of Mesh3DToVolumetricHullFilter creates a voxel-based volume (Image3D) of a Mesh3D (input). The hull of the mesh is represented by voxels in the output.

Extends Filter

MultiplyImageFilter

Multiplies two Image2D pixel by pixel. They must have the same number of components per pixel and the same size.

Outputs a new Image2D.

Equivalent to SpectralScaleImageFilter.

Usage

Extends ImageToImageFilter

NaturalNeighborSparseInterpolationImageFilter

Inputs are alternatively

- "seeds" , an array of seeds

- "samplingMap"

As metadata:

- "outputSize" as {width: Number, height: Number}

- "samplingMapOnly" as a boolean. If true, only the sampling map is generated; if false, both the sampling map and the output image are generated

As output:

- "0" or no argument, the output image

- "samplingMap", the sampling map. Only available if "samplingMap" is not already given as input

Extends Filter

NearestNeighborSparseInterpolationImageFilter

Given a set of seeds (each being {x: Number, y: Number, value: Number}), an instance of NearestNeighborSparseInterpolationImageFilter creates an image where each pixel takes the value of the closest seed.

The original seeds must be given as an Array of Object using

the method .addInput( seeds, "seeds")

The output image size must be set using the method

.setMetadata( "outputSize", {width: Number, height: Number})

The given point can be outside the output image boundaries.

Usage

Extends Filter

NormalizeImageFilter

A NormalizeImageFilter instance takes an Image2D as input and outputs an Image2D. The output image will have values in [0.0, 1.0]. One use is that it can then serve as a scaling function.

The max value to normalize with will be the max value of the input image (among all components), but a manual max value can be given to this filter using .setMetadata("max", m).
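The normalization described above amounts to dividing every value by a maximum. A minimal sketch, assuming non-negative input values and a hypothetical function name (this is not the filter's code):

```javascript
// Hypothetical helper: divides every value by max.
// When max is omitted, the largest value of the data is used.
function normalize(data, max) {
  let divisor = max;
  if (divisor === undefined) {
    divisor = -Infinity;
    for (const v of data) if (v > divisor) divisor = v;
  }
  const out = new Float32Array(data.length);
  if (divisor === 0) return out; // all-zero input stays at zero
  for (let i = 0; i < data.length; i++) out[i] = data[i] / divisor;
  return out;
}
```

Passing a manual maximum larger than the data maximum keeps the output strictly below 1.0, which can be useful when several images must share the same scale.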

Usage

Extends ImageToImageFilter

PatchImageFilter

An instance of PatchImageFilter will copy an Image2D into another at a given position. The same process can be repeated multiple times so that the output is the result of several patches applied on an image with a solid color background.

Usage

Extends ImageToImageFilter

SimpleThresholdFilter

An instance of SimpleThresholdFilter performs a threshold on an input image.

The input must be an Image2D with 1, 3 or 4 bands.

The default threshold can be changed using .setMetadata("threshold", 128)

and the low and high value can be replaced using .setMetadata("lowValue", 0)

and .setMetadata("highValue", 255). In addition, when dealing with an

RGBA image, you can choose whether to preserve the alpha channel, using

.setMetadata("preserveAlpha", true).
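The effect of these four settings can be shown with a standalone sketch on interleaved pixel data. The function name is hypothetical, the default values simply reuse the numbers quoted above, and treating a value equal to the threshold as "high" is an assumption.

```javascript
// Hypothetical threshold on interleaved pixel data (ncpp components per pixel).
function simpleThreshold(data, ncpp, options = {}) {
  const {
    threshold = 128,
    lowValue = 0,
    highValue = 255,
    preserveAlpha = true,
  } = options;
  const out = new Uint8Array(data.length);
  for (let i = 0; i < data.length; i++) {
    // In a 4-band (RGBA) image, every fourth component is the alpha channel.
    const isAlpha = ncpp === 4 && i % 4 === 3;
    if (isAlpha && preserveAlpha) {
      out[i] = data[i];
    } else {
      out[i] = data[i] < threshold ? lowValue : highValue;
    }
  }
  return out;
}
```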

Usage

Extends ImageToImageFilter

SimplifyLineStringFilter

An instance of SimplifyLineStringFilter takes a LineString and simplifies it according to a given tolerance distance (in pixels, possibly sub-pixel). This filter outputs another LineString with fewer points.

Usage

Extends Filter

SpatialConvolutionFilter

An instance of SpatialConvolutionFilter performs a convolution in the spatial domain; this can be applying a Sobel filter, a mean or Gaussian blur, or performing a derivative. The filter is an NxM kernel (aka. an array of arrays) of the following form:

var meanBlurFilter = [
  [1/9, 1/9, 1/9],
  [1/9, 1/9, 1/9],
  [1/9, 1/9, 1/9],
];

For example, in the case of a simple derivative, it looks like this:

var dx = [
  [1, -1]
];

// or

var dy = [
  [1],
  [-1]
];

The filter must be specified using the method .setMetadata('filter', ...).
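To show how a kernel given as an array of arrays is applied, here is a standalone sketch for a single-component image. It is not the filter's implementation: the function name is hypothetical, the kernel is applied without flipping (strictly speaking a cross-correlation), the kernel anchor is its integer center, and border pixels are handled by clamping coordinates. All of these are assumptions.

```javascript
// Hypothetical spatial filtering of a single-band image stored row by row.
function convolve2D(data, width, height, kernel) {
  const kh = kernel.length;
  const kw = kernel[0].length;
  const oy = Math.floor(kh / 2); // vertical anchor of the kernel
  const ox = Math.floor(kw / 2); // horizontal anchor of the kernel
  const out = new Float32Array(width * height);

  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let sum = 0;
      for (let ky = 0; ky < kh; ky++) {
        for (let kx = 0; kx < kw; kx++) {
          // Clamp coordinates so border pixels reuse the nearest valid pixel.
          const sx = Math.min(width - 1, Math.max(0, x + kx - ox));
          const sy = Math.min(height - 1, Math.max(0, y + ky - oy));
          sum += kernel[ky][kx] * data[sy * width + sx];
        }
      }
      out[y * width + x] = sum;
    }
  }
  return out;
}
```

With the kernel [[1, -1]], each output value is the left neighbour minus the current pixel, which is a simple horizontal derivative.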

Usage

Extends ImageToImageFilter

SpectralScaleImageFilter

Multiplies an image by another, like a scaling function.

The filter requires two inputs named "0" and "1". Simply use addInput( myImg1, "0" ) and addInput( myImg2, "1" ). The input "0" can have 1 or more bands while the input "1" can have only one band, since the same scale is applied to each band.

Usage

Extends ImageToImageFilter

Run the filter

TerrainRgbToElevationImageFilter

This filter's purpose is to convert Mapbox's TerrainRGB image data into monochannel elevation (in meters). See more info about the format here: https://www.mapbox.com/blog/terrain-rgb/ . The filter takes an Image2D that respects Mapbox's format (it can be the result of stitching tiles together) and outputs a single-component image with possibly up to 16777216 different values.
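The decoding formula published by Mapbox for Terrain-RGB tiles is elevation = -10000 + (R * 256 * 256 + G * 256 + B) * 0.1. The sketch below applies it to interleaved pixel data; the function name and the data layout are assumptions, and this is not the filter's own code.

```javascript
// Hypothetical decoder: interleaved RGB or RGBA bytes in, elevation in meters out.
function terrainRgbToElevation(data, ncpp) {
  const pixelCount = Math.floor(data.length / ncpp);
  const elevation = new Float32Array(pixelCount);
  for (let p = 0; p < pixelCount; p++) {
    const r = data[p * ncpp];
    const g = data[p * ncpp + 1];
    const b = data[p * ncpp + 2];
    // 24 bits of height information, in steps of 0.1 m, offset by -10000 m.
    elevation[p] = -10000 + (r * 256 * 256 + g * 256 + b) * 0.1;
  }
  return elevation;
}
```

The three 8-bit channels together give 256 * 256 * 256 = 16777216 possible values, which matches the figure quoted above.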

Usage

Extends ImageToImageFilter

TriangulationSparseInterpolationImageFilter

An instance of TriangulationSparseInterpolationImageFilter performs a triangulation

of an original dataset followed by a barycentric 2D interpolation. It is used to

perform a 2D linear interpolation of a sparse dataset.

The original dataset is specified using the method .addInput( seeds, "seeds" ), where

seeds is an Array of {x: Number, y: Number, value: Number}.

The triangulation is the result of a Delaunay triangulation.

This filter outputs an Image2D with interpolated values only within the boundaries

of the convex hull created by the triangulation. The size of the output must be

specified using the method .setMetadata( "outputSize", {width: Number, height: Number}).

Note 1: at least 3 unaligned points are required to perform a triangulation.

Note 2: points can be outside the boundaries of the original image.

Note 3: interpolated values are floating point.

Note that only single-component images are output by this filter.
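The barycentric step can be sketched for a single triangle as follows. This is an illustration only: the function name is hypothetical, the Delaunay triangulation itself is not shown, and returning null for points outside the triangle is an assumed convention.

```javascript
// Hypothetical helper: linear interpolation of a value at point p inside the
// triangle (a, b, c). a, b and c are {x, y, value}; p is {x, y}.
function barycentricInterpolate(p, a, b, c) {
  const det = (b.y - c.y) * (a.x - c.x) + (c.x - b.x) * (a.y - c.y);
  if (det === 0) return null; // aligned points: not a valid triangle

  // Barycentric weights of p relative to a, b and c (they sum to 1).
  const wa = ((b.y - c.y) * (p.x - c.x) + (c.x - b.x) * (p.y - c.y)) / det;
  const wb = ((c.y - a.y) * (p.x - c.x) + (a.x - c.x) * (p.y - c.y)) / det;
  const wc = 1 - wa - wb;

  // A negative weight means p lies outside the triangle.
  if (wa < 0 || wb < 0 || wc < 0) return null;
  return wa * a.value + wb * b.value + wc * c.value;
}
```

Because the weights are linear in p, the interpolated surface is a flat facet over each triangle, which is why the result is a 2D linear interpolation limited to the convex hull of the seeds.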

Usage

Extends Filter

_HELPER

Helpers are here to help.

AngleToHueWheelHelper

AngleToHueWheelHelper aims to help visualize angular data such as gradient orientation. The idea behind the "hue wheel" is to associate every direction (angle) to a color without having the 0/360 interruption.

The helper takes one Image2D input and gives one RGBA Image2D output. From the input, the index of the component that contains the angular information has to be given using .setMetadata("component", n), where n is 0 by default.

Depending on the usage of this filter, the range of angles can vary, i.e. in [0, 2PI] (the default), in [-PI/2, PI/2] (in the case of a gradient) or even in degrees [0, 360]. In any case, use .setMetadata("minAngle", ...) and .setMetadata("maxAngle", ...).

If the metadata "minAngle" or "maxAngle" is given the value "auto", then the min and max values of the image will be looked up (or computed if not defined).

Usage

Extends ImageToImageFilter

A part of this code was borrowed from github.com/netbeast/colorsys and modified.

(any)

(any

= 100)

(any

= 100)

ColorScales

From https://github.com/bpostlethwaite/colormap

Colormap

A Colormap instance is a range of colors and can be used in two ways: first, by getting a single color using .getValueAt(p) where p is a position in [0, 1] and, second, by building an entire LUT with a given granularity and then getting back these values.

In case of intensive use (i.e. applying fake colors), building a LUT is the faster option.

Once a LUT is built, an image of this LUT can be created (horizontal or vertical, flipped or not). The image will be flipped if the flip metadata is set to true.

This image, which is an Image2D, is not supposed to be used as a LUT but just as a visual reference.

Usage

Extends PixpipeObject

(Object

= {})

here is the list of options:

style {String} - one of the available styles (see property names in ColorScales.js)

description {Object} - colormap description like in ColorScales.js. Can also be the equivalent JSON string.

lutSize {Number} - Number of samples to pregenerate a LUT

Note: "style" and "description" are mutually exclusive and "style" has the priority in case both are set.

Creates a horizontal RGB Image2D of the colormap. The height is 1px and

the width is the size of the LUT currently in use.

A RGBA image can be created when passing the argument forceRGBA to true.

In this case, the alpha channel is 255.

The image can be horizontally flipped when the "flip" metadata is true

(Boolean

= false)

forces the creation of a RGBA image instead of a RGB image

Image2D:

the result image

Creates a vertical RGB Image2D of the colormap. The width is 1px and the height is the size of the LUT currently in use. The image can be vertically flipped when the "flip" metadata is true.

(Boolean

= false)

forces the creation of a RGBA image instead of a RGB image

Image2D:

the result image

LineStringPrinterOnImage2DHelper

An instance of LineStringPrinterOnImage2DHelper prints a list of LineStrings on

an Image2D. To add the Image2D input, use .addInput(myImage2D).

To add a LineString, use .addLineString(ls, c ); where ls is a LineString

instance and c is an Array representing a color (i.e. [255, 0, 0] for red).

Usage

Extends ImageToImageFilter

Add a LineString instance to be printed on the image

(LineString)

a linestring to add

(Array)

of the form [R, G, B] or [R, G, B, A]

_IO

The input/output objects are special kinds of Filter objects. They read or write files from a server or from disk.

BrowserDownloadBuffer

An instance of BrowserDownloadBuffer takes an ArrayBuffer as input and triggers

a download when update() is called. This is for browser only!

A filename must be specified using .setMetadata( "filename", "myFile.ext" ).

Extends Filter

CanvasImageWriter

CanvasImageWriter is a filter to output an instance of Image2D into an HTML5 canvas element.

The metadata "parentDivID" has to be set using setMetadata("parentDivID", "whatever").

The metadata "alpha", if true, enables transparency. Default: false.

If the input Image2D has values not in [0, 255], you can remap/stretch using

setMetadata("min", xxx ) default: 0

setMetadata("max", xxx ) default: 255

We can also use setMetadata("reset", false) so that we can add another canvas

with a new image at update.

Usage

Extends Filter

(String)

dom id of the future canvas' parent.

(most likely the ID of a div)

// create an image

var myImage = new pixpipe.Image2D({width: 100, height: 250, color: [255, 128, 64, 255]})

// create a filter to write the image into a canvas

var imageToCanvasFilter = new pixpipe.CanvasImageWriter( "myDiv" );

imageToCanvasFilter.addInput( myImage );

imageToCanvasFilter.update();

FileImageReader

An instance of FileImageReader takes an HTML5 File object of a png or jpeg image as input and returns an Image2D as output. For the Tiff format, use TiffDecoder instead.

The point is mainly to use it with a file dialog.

Use the regular addInput() and getOutput() with no argument for that.

Reading a local file is an asynchronous process. For this

reason, what happens next, once the Image2D is created must take place in the

callback defined by the event .on("ready", function(){ ... }).

Usage

Extends Filter

var file2ImgFilter = new pixpipe.FileImageReader( ... );

file2ImgFilter.addInput( fileInput.files[0] );

file2ImgFilter.update();

FileToArrayBufferReader

Takes the File inputs from an HTML input of type "file" (aka. a file dialog) and reads them as ArrayBuffers.

Every File given in input should be added separately using addInput( file[i], 'uniqueID' ).

The event "ready" must be set up (using .on("ready", function(){})) and will be triggered when all the files given in input have been translated into ArrayBuffers.

Once ready, all the outputs are accessible using the same uniqueID with the method getOutput("uniqueID").

Gzip-compressed files will be uncompressed.

Once the filter is updated, you can query the filenames metadata (sorted by categories) and also the checksums metadata using .getMetadata(). The latter gives a unique md5, very convenient for checking whether two files are actually the same. Note that if the file is gzipped, the checksum is computed on the raw file, not on the un-gzipped buffer.

If a file is not binary but text, set the metadata "readAsText" to true.

Usage

Extends Filter

UrlImageReader

An instance of UrlImageReader takes an image URL to jpeg or png as input and

returns an Image2D as output. Use the regular addInput() and getOutput()

with no argument for that. For Tiff format, use TiffDecoder instead.

Reading a file from URL takes an AJAX request, which is asynchronous. For this

reason, what happens next, once the Image2D is created must take place in the

callback defined by the event .on("ready", function(){ ... }).

Usage: examples/urlToImage2D.html

UrlImageReader can also load multiple images and call the "ready" event only when all of them are loaded.

Usage

Extends Filter

(function)

function to call when the image is loaded.

The

this

object will be in argument of this callback.

var url2ImgFilter = new pixpipe.UrlImageReader( ... );

url2ImgFilter.addInput( "images/sd.jpg" );

url2ImgFilter.update();

Overload the function

UrlToArrayBufferReader

Opens files as ArrayBuffers using their URLs. You must specify one or several URLs (String) using addInput("...") and add a function to the event "ready" using .on( "ready", function(filter){ ... }). The "ready" event will be called only when all inputs are loaded.

Gzip-compressed files will be uncompressed.

Once the filter is updated, you can query the filenames metadata (sorted by categories) and also the checksums metadata using .getMetadata(). The latter gives a unique md5, very convenient for checking whether two files are actually the same. Note that if the file is gzipped, the checksum is computed on the raw file, not on the un-gzipped buffer.

If a file is not binary but text, set the metadata "readAsText" to true.

Usage

Extends Filter

_UTILS

The Utils usually do not respect the inheritance schema provided by Pixpipe because they are neither data structures nor filters. They just provide small features that can be used by multiple PixpipeObjects.

FunctionGenerator

The FunctionGenerator is a collection of static methods to get samples of function output such as gaussian values.

Get the gaussian values given an interval and a sigma (standard deviation). Note that this gaussian is the one used in a frequency response. If you are looking for the gaussian as an impulse response, check the method gaussianImpulseResponse.

(Number)

the standard deviation (or cutoff frequency)

(Number)

the first value on the abscissa

(Number)

the last value on the abscissa (included)

(any

= 1)

(Number

= 0)

threshold, takes only gaussian values above this value

Object:

.data: Float32Array - the gaussian data,

.actualBegin: like begin, except if onlyAbove is used and the sequence would start later

.actualEnd: like end, except if onlyAbove is used and the sequence would stop earlier

Get the value of the gaussian given a x and a sigma (standard deviation).

Note that this gaussian is the one used in a frequency response. If you are looking for the gaussian as an impulse response, check the method gaussianImpulseResponseSingle.

Number:

the gaussian value at x for the given sigma

MatrixTricks

MatrixTricks contains only static functions that add features to glMatrix. Like in glMatrix, all the matrices arrays are expected to be column major.

Expand a 3x3 matrix into a 4x4 matrix. Does not alter the input.

(Array)

3x3 matrix in a 1D Array[9] arranged as column-major

(String

= "LEFT")

"LEFT" to stick the 3x3 matrix at the left of the 4x4, "RIGHT" to stick to the right

(String

= "TOP")

"TOP" to stick the 3x3 matrix at the top of the 4x4, "BOTTOM" to stick to the bottom

Array:

the expanded 4x4 matrix in a 1D Array[16] arranged as column-major

Get a value in the matrix, at a given row/col position.

(Array)

nxn matrix in a 1D Array[nxn] arranged as column-major

(Number)

size of a side, 4 for a 4x4 or 3 for a 3x3 matrix

(Number)

position in column (x)

(Number)

position in row (y)

Number:

value in the matrix

Set a value in the matrix, at a given row/col position

Set a value in the matrix, at a given row/col position
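For readers unfamiliar with column-major storage, the sketch below shows one way to read and write a value at a given column and row of an n x n matrix stored in a 1D array. The function names and the argument order are assumptions for illustration, not the MatrixTricks signatures.

```javascript
// Hypothetical accessors for an n x n matrix stored column after column.
function getValueColumnMajor(matrix, n, col, row) {
  return matrix[col * n + row];
}

function setValueColumnMajor(matrix, n, col, row, value) {
  matrix[col * n + row] = value;
}

// Example: a 4x4 translation matrix as glMatrix stores it.
// The translation (5, 6, 7) lives in the last column, at indices 12, 13, 14.
const translation = [
  1, 0, 0, 0, // column 0
  0, 1, 0, 0, // column 1
  0, 0, 1, 0, // column 2
  5, 6, 7, 1, // column 3
];
```

Reading column 3, row 1 of this matrix returns the y component of the translation.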

_misc

convertImage3DMetadata

Converts the original Image3D metadata into the new

(any)

swapn

[STATIC] swap the data to be used from the outside (ie. nifti)

(any)

(any)

transformToMinc

[STATIC] mainly used by the outside world (like from Nifti)

(any)

(any)

ForwardFourierImageFilter

An instance of ForwardFourierImageFilter performs a forward Fourier transform on an Image2D or a Signal1D.

Usage

Extends BaseFourierImageFilter

ignore_offsets

This is here because there are two different ways of interpreting the origin of an MGH file. One can ignore the offsets in the transform, using the centre of the voxel grid. Or you can correct these naive grid centres using the values stored in the transform. The first approach is what is used by surface files, so to get them to register nicely, we want ignore_offsets to be true. However, getting volumetric files to register correctly implies setting ignore_offsets to false.

InverseFourierImageFilter

An instance of InverseFourierImageFilter performs an inverse Fourier transform on an Image2D or a Signal1D.

Usage

Extends BaseFourierImageFilter